Figure 1. Block Diagram of the Titan FPGA Design Using Reveal to Capture TLP Messages.

pyhwtest is a great little utility. With it I can access the memory space on a PCIe card from Python, and everything from simple reads and writes to DMA transactions works without my having to write a kernel driver. All it needs is the base address of the BAR that I want to access. Listing 1 (below) shows the output of lspci: BAR0 is mapped to 0xDC000000.

    [root@localhost src]# lspci -d dead:* -v
    04:00.0 Non-VGA unclassified device: Indigita Corporation Device beef (rev 06)
        Flags: bus master, fast devsel, latency 0, IRQ 10
        Memory at dc000000 (32-bit, non-prefetchable) [size=256]
        Memory at d8000000 (32-bit, non-prefetchable) [size=64M]
        Capabilities: [50] Power Management version 3
        Capabilities: [70] MSI: Enable- Count=1/1 Maskable- 64bit+
        Capabilities: [90] Express Endpoint, MSI 00
        Capabilities: [100] Device Serial Number 00-00-00-00-00-00-00-00
    [root@localhost src]#

Listing 1. Linux Terminal Output Showing the Output from lspci.

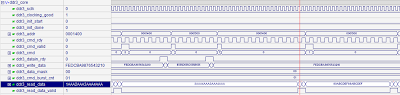
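Reading the base address off the screen is fine for a one-off, but the same lookup can be scripted. The following is a minimal sketch, not part of pyhwtest: the function name `find_memory_regions` and the embedded sample text are illustrative, and it assumes the first "Memory at" line corresponds to BAR0, as it does in Listing 1.

```python
import re

# Sample text copied from Listing 1. On a live system this would come from
# running `lspci -d dead:* -v` instead of a hard-coded string.
LSPCI_OUTPUT = """\
04:00.0 Non-VGA unclassified device: Indigita Corporation Device beef (rev 06)
    Flags: bus master, fast devsel, latency 0, IRQ 10
    Memory at dc000000 (32-bit, non-prefetchable) [size=256]
    Memory at d8000000 (32-bit, non-prefetchable) [size=64M]
"""

# Matches lines such as "Memory at dc000000 (...) [size=256]".
MEMORY_LINE = re.compile(r"Memory at ([0-9a-fA-F]+) .*\[size=(\w+)\]")


def find_memory_regions(lspci_text):
    """Return (base_address, size_string) for each 'Memory at' line, in order."""
    regions = []
    for line in lspci_text.splitlines():
        match = MEMORY_LINE.search(line)
        if match:
            regions.append((int(match.group(1), 16), match.group(2)))
    return regions


regions = find_memory_regions(LSPCI_OUTPUT)
bar0_base, bar0_size = regions[0]  # assumption: first region is BAR0
print(hex(bar0_base), bar0_size)
```

The resulting `bar0_base` is the value that would be passed to a test script with an option like `-b 0xdc000000`.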

Listing 2 shows the commands required to write 0x12345678 to BAR0 (address 0xDC000000), and Figure 2 shows the Reveal capture that results. It's a good sign that the data written in pyhwtest shows up in the capture. I built a simple spreadsheet to decode the captured TLP (Table 1). From here I'll start thinking about how best to decode TLPs in the FPGA and how best to handle data flow to and from the PC.

    [root@localhost refresh_test]#
    [root@localhost refresh_test]# python -i titan_test.py -b 0xdc000000
    Base address: 0xdc000000L
    >>> hwtest.writelw(BASE, le32_to_be(0x12345678))
    >>>
    [root@localhost refresh_test]#

Listing 2. Python Terminal Output Showing a Data Write to the Base Address of BAR0.
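The `le32_to_be` helper used in Listing 2 lives in titan_test.py and is not reproduced here. Assuming it is a plain 32-bit byte swap (an assumption, since the source is not shown), a stand-in could look like the sketch below. The name `swap32` is hypothetical.

```python
import struct


def swap32(value):
    """Reverse the byte order of a 32-bit unsigned integer."""
    # Pack as big-endian, then reinterpret the same four bytes as little-endian.
    return struct.unpack("<I", struct.pack(">I", value & 0xFFFFFFFF))[0]


swapped = swap32(0x12345678)
print(hex(swapped))  # 0x78563412
```

If the helper does work this way, the swap would explain why the data word in the capture reads 0x12345678: a little-endian host puts the least significant byte at the lowest address, and the TLP carries the lowest-addressed byte first.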

Figure 2. Reveal Capture of the Data Write TLP Message.

H0

| R [31] | Fmt [30:29] | Type [28:24] | R [23] | TC [22:20] | R [19:16] | TD [15] | EP [14] | Attr [13:12] | R [11:10] | Length [9:0] |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 10 | 00000 | 0 | 000 | 0000 | 0 | 0 | 01 | 00 | 00 0000 0001 |

H1

| Requester ID [31:16] | Tag [15:8] | Last BE [7:4] | First BE [3:0] |
|---|---|---|---|
| 0000 0000 0000 0000 | 0000 0001 | 0000 | 1111 |

H2

| Address [31:2] | R [1:0] |
|---|---|
| 1101 1100 0000 0000 0000 0000 0000 00 | 00 |

D0

| Data [31:0] |
|---|
| 0001 0010 0011 0100 0101 0110 0111 1000 |

Table 1. Decode of Captured RX TLP Message.
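The spreadsheet decode in Table 1 can also be done in a few lines of Python. This is only a sketch of the field extraction for a three-word header laid out as in Table 1. The function names `decode_3dw_header` and `bits_to_word` are illustrative, and the bit strings are transcribed by hand from the table rows.

```python
def bits_to_word(bits):
    """Convert a string of 0/1 characters (spaces allowed) to an integer."""
    return int(bits.replace(" ", ""), 2)


def decode_3dw_header(h0, h1, h2):
    """Split three 32-bit header words into the fields named in Table 1."""
    return {
        "fmt": (h0 >> 29) & 0x3,
        "type": (h0 >> 24) & 0x1F,
        "tc": (h0 >> 20) & 0x7,
        "td": (h0 >> 15) & 0x1,
        "ep": (h0 >> 14) & 0x1,
        "attr": (h0 >> 12) & 0x3,
        "length": h0 & 0x3FF,            # payload length in 32-bit words
        "requester_id": (h1 >> 16) & 0xFFFF,
        "tag": (h1 >> 8) & 0xFF,
        "last_be": (h1 >> 4) & 0xF,
        "first_be": h1 & 0xF,
        "address": h2 & 0xFFFFFFFC,      # low two bits are reserved
    }


# Rows H0, H1, H2 and D0 as read from Table 1.
H0 = bits_to_word("0100 0000 0000 0000 0001 0000 0000 0001")
H1 = bits_to_word("0000 0000 0000 0000 0000 0001 0000 1111")
H2 = bits_to_word("1101 1100 0000 0000 0000 0000 0000 0000")
D0 = bits_to_word("0001 0010 0011 0100 0101 0110 0111 1000")

fields = decode_3dw_header(H0, H1, H2)
for name, value in fields.items():
    print(f"{name:>12}: {value:#x}")
print(f"{'data':>12}: {D0:#x}")
```

With Fmt = 10b and Type = 00000b the packet is a memory write with a three-word header, the length is one word, all four bytes are enabled, and the address and data match what was written from Listing 2.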